Notes on Quant Infrastructures

Here is a short note on quant infrastructures: the layers of complexity involved in building such systems and what-not.

Before we go into that: over the next week we should have the Woox documentation and user example scripts live on the docs site! Our next target is either... OKX or Polymarket. Well, these are the markets I am currently getting involved in either way, so I will have to build out some infra.

Let’s get into it!

The first thing to address is whether such infrastructure is necessary at all. A few points:

The smaller the player, the leaner the infrastructure (smaller players should be involved in more niche markets, where they can iterate quickly on ideas and extract pnl). In larger teams, the work is split between quant dev/research and even pure software engineering/quality assurance, while in smaller teams individuals may oversee the whole stack.

The relative importance of infrastructure scales with the source of pnl: inefficiencies call for fast scripting, while harvesting risk premia calls for investment in infrastructure. This is because inefficiencies are typical of strategies with high urgency and low scalability, while premia generally extend to nearby markets.

Infrastructural development is needed most heavily in two areas: research infrastructure and execution infrastructure (the two overlap).

Research Infrastructure

Research infra brings robustness to almost exactly this process:

ideation

test of hypothesis

validation of hypothesis

statistical analysis

Let's give an example of each. Suppose you wake up one day suspecting that high volatility on one day should be followed by higher volatility the next, i.e. that volatility is autocorrelated. You then conjecture that sizing positions based on volatility (vol-targeting) is a good idea. This is ideation.
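A quick way to sanity-check this kind of suspicion is to measure the lag-1 autocorrelation of absolute returns, a common proxy for day-to-day volatility. This is a minimal sketch: `simulate_garch_returns` is a hypothetical stand-in that generates toy GARCH(1,1) returns with illustrative parameters, and in practice you would feed in your own archived returns instead.

```python
import random
from statistics import fmean


def lag1_autocorr(xs):
    """Sample lag-1 autocorrelation of a sequence."""
    m = fmean(xs)
    dev = [x - m for x in xs]
    denom = sum(d * d for d in dev)
    if denom == 0:
        raise ValueError("series has zero variance")
    num = sum(a * b for a, b in zip(dev[:-1], dev[1:]))
    return num / denom


def simulate_garch_returns(n, omega=1e-6, alpha=0.1, beta=0.85, seed=0):
    """Hypothetical toy data: GARCH(1,1) returns, which exhibit volatility clustering."""
    rng = random.Random(seed)
    var = omega / (1 - alpha - beta)  # start at the unconditional variance
    out = []
    for _ in range(n):
        r = rng.gauss(0.0, var ** 0.5)
        out.append(r)
        var = omega + alpha * r * r + beta * var  # today's shock feeds tomorrow's variance
    return out


if __name__ == "__main__":
    rets = simulate_garch_returns(5000)
    print("lag-1 autocorr of returns:   ", round(lag1_autocorr(rets), 3))
    print("lag-1 autocorr of |returns|: ", round(lag1_autocorr([abs(r) for r in rets]), 3))
```

Raw returns should show little autocorrelation while absolute returns show a clearly positive one; that gap is the suspicion being formalized.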

You already have a strategy, but you are not happy with its risk management. You apply vol-targeting and find that it significantly improves the risk profile and the Sharpe ratio. This is the test of hypothesis.
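Vol-targeting can be sketched as scaling each period's position by a target volatility divided by trailing realized volatility. This is one simple variant under stated assumptions: the function names, the 20-period window, the per-period `target_vol` and the leverage cap are all hypothetical choices, and signals are assumed to be +1/-1/0 directions from your existing strategy.

```python
from statistics import stdev


def vol_target_sizes(returns, window=20, target_vol=0.01, max_leverage=3.0):
    """Per-period position multipliers aiming for a constant per-period volatility.

    The size for period t uses only returns strictly before t, so there is no lookahead.
    Periods without `window` returns of history get None.
    """
    if window < 2:
        raise ValueError("window must be at least 2 to estimate a standard deviation")
    sizes = []
    for t in range(len(returns)):
        if t < window:
            sizes.append(None)
            continue
        sigma = stdev(returns[t - window:t])  # trailing realized volatility
        sizes.append(max_leverage if sigma == 0 else min(target_vol / sigma, max_leverage))
    return sizes


def sized_pnl(signals, returns, sizes):
    """Per-period pnl of direction (+1/-1/0) x size x asset return, skipping warm-up periods."""
    return [s * k * r for s, k, r in zip(signals, sizes, returns) if k is not None]
```

Comparing `sized_pnl` against the unsized pnl (all sizes equal to 1) over the same periods gives the before/after comparison described above.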

You need to validate your results. Your trial run was successful, but you could have been lucky. If your original sizing was effectively random, and the new one was also random, you just flipped a coin. To gauge whether vol-sizing actually adds performance, you permute the size vector and match the sizes randomly to the buy/sell decisions. If your risk-controlled strategy beats, say, 99% of the permutations, the improvement is unlikely to be luck. This is validation.
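The permutation step can be sketched as follows, assuming Sharpe ratio is the performance measure (any other metric could be swapped in). The function names are hypothetical; buy/sell decisions and returns stay fixed, and only the pairing of sizes to trades is shuffled.

```python
import random
from statistics import fmean, stdev


def sharpe(pnl):
    """Per-period Sharpe ratio (no annualization, zero risk-free rate)."""
    sd = stdev(pnl)
    return fmean(pnl) / sd if sd > 0 else 0.0


def size_permutation_test(signals, returns, sizes, n_perm=1000, seed=0):
    """Compare the real sizing against random re-assignments of the same sizes.

    Returns (actual Sharpe, p-value), where the p-value is the share of permutations
    doing at least as well, with a +1 correction so it is never exactly 0.
    """
    rng = random.Random(seed)

    def strategy_sharpe(sz):
        return sharpe([s * k * r for s, k, r in zip(signals, sz, returns)])

    actual = strategy_sharpe(sizes)
    shuffled = list(sizes)
    at_least_as_good = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if strategy_sharpe(shuffled) >= actual:
            at_least_as_good += 1
    return actual, (at_least_as_good + 1) / (n_perm + 1)
```

A p-value around 0.01 corresponds to "beats 99% of the permutations". Sizes must be plain numbers here, so any warm-up periods without a size should be dropped first.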

With this sizing, you can now extract statistics: the expected value of your trades, win rates, drawdowns, et cetera. Given these characteristics, is the strategy viable, or will you throw in the towel halfway through the drawdown? This is statistics. This set of processes recurs constantly over a career in quantitative trading. If you write new code every time you need to perform such an exercise, you face problems of both time efficiency and robustness. And if bugs or biases creep into your process, congratulations: you have just deployed a fee-eating machine.
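The statistics listed above can be computed from a list of closed-trade pnls in a few lines. This is a minimal sketch with a hypothetical function name; "longest drawdown" is measured here in number of trades spent below the previous equity peak, one rough way to ask how long you would have to sit through a losing stretch.

```python
from statistics import fmean


def trade_stats(trade_pnls):
    """Summary statistics for a sequence of closed-trade pnls, in trade order."""
    if not trade_pnls:
        raise ValueError("no trades")
    wins = [p for p in trade_pnls if p > 0]
    losses = [p for p in trade_pnls if p < 0]
    equity = peak = max_dd = 0.0
    underwater = longest_underwater = 0
    for p in trade_pnls:
        equity += p
        if equity >= peak:  # new high-water mark (the starting equity of 0 counts as one)
            peak = equity
            underwater = 0
        else:
            underwater += 1
            longest_underwater = max(longest_underwater, underwater)
        max_dd = max(max_dd, peak - equity)
    return {
        "expected_value": fmean(trade_pnls),
        "win_rate": len(wins) / len(trade_pnls),
        "avg_win": fmean(wins) if wins else 0.0,
        "avg_loss": fmean(losses) if losses else 0.0,
        "max_drawdown": max_dd,
        "longest_drawdown_trades": longest_underwater,
    }
```

For example, `trade_stats([10, -5, -5, 20, -10])` gives an expected value of 2.0 per trade, a 40% win rate, a maximum drawdown of 10 and a longest drawdown of 2 trades.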

Facilitating these research processes creates a number of infrastructural needs, including data processing, feature generation, portfolio optimization, et cetera. Again, depending on team size, these functions can be collapsed into one architecture (small teams) or split across multiple teams handling data retrieval, archival and cleaning (large teams).

Another way to split research infrastructure demands is into (i) simulation and execution and (ii) operations tangential to simulation. Simulations use archived data to replicate real-world conditions as closely as possible, in order to test whether a strategy is profitable. The latter answer equally important questions such as 'are option implied volatilities overpriced relative to realized volatility when evaluated at expiry?' Answering this question itself requires a good query language and scripting to extract the necessary options data from the database archive.
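Once the options data has been extracted, the comparison itself is short. This sketch assumes the hard part, the archive query, is already done and has produced hypothetical `(implied_vol_at_entry, closes_from_entry_to_expiry)` pairs; it uses a zero-mean realized volatility and 365 periods per year, which assumes daily closes on a 24/7 market.

```python
import math
from statistics import fmean


def realized_vol(closes, periods_per_year=365):
    """Annualized realized volatility from close-to-close log returns (zero-mean convention)."""
    rets = [math.log(b / a) for a, b in zip(closes, closes[1:])]
    if not rets:
        raise ValueError("need at least two closes")
    return math.sqrt(sum(r * r for r in rets) / len(rets) * periods_per_year)


def iv_minus_rv(samples, periods_per_year=365):
    """samples: (implied_vol_at_entry, closes_from_entry_to_expiry) pairs.

    Returns the mean IV - RV spread and the fraction of samples where IV exceeded RV.
    """
    spreads = [iv - realized_vol(path, periods_per_year) for iv, path in samples]
    return fmean(spreads), sum(s > 0 for s in spreads) / len(spreads)
```

A persistently positive mean spread, with IV above RV in most samples, is the kind of evidence that would support "implied vol is overpriced"; a careful study would also account for how noisy a single option's realized volatility is.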

Infrastructural Design

Some further notes on infrastructural choice. The standard approach is to adopt the least complex solution that simulates your strategy to the accuracy it requires. For example, if it is trend following on monthly data, you can use pen and paper. If it is FX carry on short-rate differentials, you probably need a computer program. If it is market making in EURUSD, you need sophisticated algorithms that model latency and queue position and estimate adverse selection.

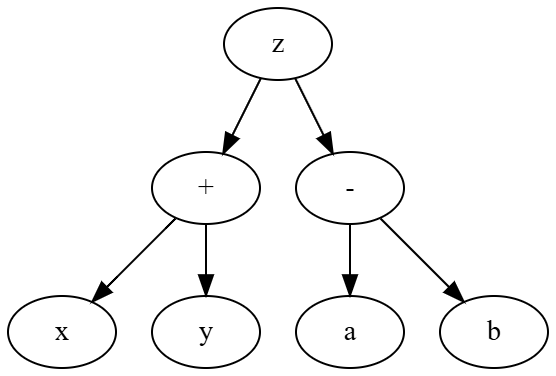

Research teams often implement their own testing engines using special languages and syntax. This typically means encoding trading strategies as mathematical formulae/functions, which are in turn composed of operands and operators. A simple example:

$$z = (x + y) \times (a - b)$$
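One possible tree encoding of z = (x + y) × (a − b) is sketched below: operands become leaves, operators become internal nodes, and evaluation walks the tree recursively. The class and function names are hypothetical and chosen for illustration only.

```python
import operator
from dataclasses import dataclass
from typing import Callable, Mapping, Union


@dataclass(frozen=True)
class Var:
    """Leaf node: an operand looked up by name at evaluation time."""
    name: str


@dataclass(frozen=True)
class Op:
    """Internal node: a binary operator applied to two sub-trees."""
    symbol: str
    fn: Callable[[float, float], float]
    left: "Node"
    right: "Node"


Node = Union[Var, Op]


def evaluate(node: Node, env: Mapping[str, float]) -> float:
    """Recursively evaluate the tree, substituting operands from env."""
    if isinstance(node, Var):
        return env[node.name]
    return node.fn(evaluate(node.left, env), evaluate(node.right, env))


def render(node: Node) -> str:
    """Print the tree back as a fully parenthesized infix formula."""
    if isinstance(node, Var):
        return node.name
    return f"({render(node.left)} {node.symbol} {render(node.right)})"


# z = (x + y) * (a - b) as a tree: '*' at the root, '+' and '-' as its children.
z = Op("*", operator.mul,
       Op("+", operator.add, Var("x"), Var("y")),
       Op("-", operator.sub, Var("a"), Var("b")))
```

`evaluate(z, {"x": 1, "y": 2, "a": 5, "b": 3})` returns 6; the same tree can be re-evaluated against any other set of operand values without new code.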

can be expressed in tree form or in other data structures. Encoding it in a suitable data structure allows the formula to be manipulated algorithmically. By substituting the operands with market data and generalizing the operators to arbitrary functions, a large set of strategies can be simulated and tested programmatically, without writing new code, once the engine is built. The benefit is that encoding rules within the framework of a testing engine leaves far less room for bugs to creep in, and makes the strategies available to tooling such as optimization, fee and testing engines. A generalization of the tree is a directed acyclic graph (DAG) representing the logic flow of a trading strategy. The language is specific to the logic being automated, the parser is specific to the language, and the evaluator is designed around the data being manipulated. An example of a parser performing these functions is documented here, where the testing engine allows trading granularity, fees and statistical tools to be specified with formulaic expressions.
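A minimal parser and evaluator for such a formula language can be built on Python's standard `ast` module: parse a formula string once, reject any syntax outside a small whitelist, then evaluate it element-wise against named data columns. Caching results by sub-expression means a repeated sub-expression is computed once, which is the DAG-style sharing mentioned above. This is a sketch under assumptions: the function set (`lag`, `abs`), the class name and the list-of-floats data layout are hypothetical, not the engine referenced in the text.

```python
import ast
import operator

_BINOPS = {ast.Add: operator.add, ast.Sub: operator.sub,
           ast.Mult: operator.mul, ast.Div: operator.truediv}
_ALLOWED = (ast.BinOp, ast.UnaryOp, ast.USub, ast.Name, ast.Load,
            ast.Constant, ast.Call, *_BINOPS)


def _broadcast(fn, a, b):
    """Apply a binary function element-wise; scalars are broadcast against series."""
    if isinstance(a, list) and isinstance(b, list):
        return [fn(x, y) for x, y in zip(a, b)]
    if isinstance(a, list):
        return [fn(x, b) for x in a]
    if isinstance(b, list):
        return [fn(a, y) for y in b]
    return fn(a, b)


def _lag(xs, n):
    """Shift a series back by n periods, padding the front with NaN (no lookahead)."""
    n = int(n)
    if n <= 0:
        raise ValueError("lag must be positive")
    return ([float("nan")] * n + list(xs))[:len(xs)]


def _abs(xs):
    return [abs(x) for x in xs] if isinstance(xs, list) else abs(xs)


class FormulaEngine:
    """Parse a formula once, then evaluate it against named columns of market data."""

    FUNCS = {"lag": _lag, "abs": _abs}  # hypothetical function set for illustration

    def __init__(self, formula):
        self.tree = ast.parse(formula, mode="eval").body
        for node in ast.walk(self.tree):  # whitelist: reject anything outside the mini-language
            if not isinstance(node, _ALLOWED):
                raise ValueError(f"unsupported syntax: {type(node).__name__}")
            if isinstance(node, ast.Constant) and not isinstance(node.value, (int, float)):
                raise ValueError("only numeric constants are allowed")
            if isinstance(node, ast.Call) and not (
                    isinstance(node.func, ast.Name) and node.func.id in self.FUNCS):
                raise ValueError("unknown function")

    def evaluate(self, data):
        cache = {}

        def ev(node):
            key = ast.dump(node)
            if key in cache:  # identical sub-expressions are computed once, DAG-style
                return cache[key]
            if isinstance(node, ast.Constant):
                out = float(node.value)
            elif isinstance(node, ast.Name):
                out = list(data[node.id])
            elif isinstance(node, ast.UnaryOp):  # only unary minus passes validation
                out = _broadcast(operator.mul, -1.0, ev(node.operand))
            elif isinstance(node, ast.BinOp):
                out = _broadcast(_BINOPS[type(node.op)], ev(node.left), ev(node.right))
            else:  # ast.Call with a whitelisted function name
                out = self.FUNCS[node.func.id](*[ev(arg) for arg in node.args])
            cache[key] = out
            return out

        return ev(self.tree)
```

For example, `FormulaEngine("close - lag(close, 1)").evaluate({"close": [10.0, 11.0, 13.0]})` yields the one-period change, with NaN for the first bar where no history exists.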

A more nuanced approach is needed when the effects being tested are subject to market microstructure and to the game-theoretic phenomena observed in high-frequency strategies. In these environments, a simulator is designed to replicate those phenomena, and a test/production environment feeds back into the simulation environment to improve the engine's accuracy for future research. Statistical modelling and advanced mathematical research are employed to study fill probabilities, order latencies, queue behavior and predatory counterparties.
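To make "queue behavior" concrete, here is a toy FIFO queue-position model for a single resting limit order. It tracks size ahead of us, our own size and size that joined behind us, and updates on adds, trades and cancels at our price level. The names are hypothetical, and the pro-rata cancellation rule (cancels assumed to come from the other resting size in proportion) is a simplifying assumption; estimating where cancels actually come from is exactly the kind of statistical modelling the text refers to.

```python
from dataclasses import dataclass


@dataclass
class QueueState:
    ahead: float           # resting size in front of our order
    ours: float            # our remaining order size
    behind: float = 0.0    # size that joined the level after us
    filled: float = 0.0    # how much of our order has executed


def on_event(state, kind, size):
    """Update queue position for one event at our price level."""
    if kind == "add":  # new orders join the back of the queue
        state.behind += size
    elif kind == "trade":  # FIFO: trades consume the queue from the front
        hit_ahead = min(size, state.ahead)
        state.ahead -= hit_ahead
        fill = min(size - hit_ahead, state.ours)
        state.ours -= fill
        state.filled += fill
        state.behind -= min(size - hit_ahead - fill, state.behind)
    elif kind == "cancel":  # assumption: cancels spread pro rata over other resting size
        others = state.ahead + state.behind
        if others > 0:
            size = min(size, others)
            frac_ahead = state.ahead / others
            state.ahead -= size * frac_ahead
            state.behind -= size * (1 - frac_ahead)
    else:
        raise ValueError(f"unknown event kind: {kind}")


def simulate_fill(ahead, ours, events):
    """Replay (kind, size) events and return the final queue state."""
    state = QueueState(ahead=ahead, ours=ours)
    for kind, size in events:
        on_event(state, kind, size)
    return state
```

For instance, with 5 lots ahead of a 3-lot order, trades of 4 and then 3 lots leave the order 2 lots filled and 1 lot still resting.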

While the earlier infrastructures were a boon to the research process but not the source of pnl itself, that is not the case in these environments. Here the technological prowess of the infrastructure is itself the source of profits and drives the fund. Agent-based modelling is used to reflect the message passing and interactions between different market entities at sub-second timescales.
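The skeleton of such a simulation can be sketched as a discrete-event loop: each agent's reaction to a price jump reaches the exchange after that agent's own latency, and the exchange processes messages strictly in arrival order. The two agents, their latencies in microseconds and the single stale ask are hypothetical; a real agent-based simulator would involve many agents, a full order book and calibration against production fills.

```python
import heapq
from dataclasses import dataclass
from itertools import count


@dataclass
class Agent:
    name: str
    action: str       # "take" (lift the stale ask) or "cancel" (pull it)
    latency_us: int   # assumed reaction + network latency, in microseconds


def run(agents, news_time_us=0):
    """Deliver each agent's message after its latency; the exchange handles arrivals in order."""
    seq = count()  # tie-breaker so equal timestamps keep submission order
    events = []
    for a in agents:
        heapq.heappush(events, (news_time_us + a.latency_us, next(seq), a))
    ask_live = True  # a single stale ask left resting after the price jump
    log = []
    while events:
        t, _, agent = heapq.heappop(events)
        if ask_live and agent.action == "take":
            ask_live = False
            log.append((t, agent.name, "lifted stale ask"))
        elif ask_live and agent.action == "cancel":
            ask_live = False
            log.append((t, agent.name, "cancelled stale ask"))
        else:
            log.append((t, agent.name, "too late"))
    return log
```

With a market maker cancelling at 120µs and a taker at 80µs, the taker's message arrives first and lifts the stale quote: the adverse-selection race in miniature.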

Of course, a good understanding of these market participants lends a helping hand in building good execution algorithms in the face of market impact and competition.

To end off, I will mention a different type of software infrastructure used by some. For most teams, especially small ones, I can only describe it as unnecessary mental masturbation. Some opt to build in-house proprietary terminals that extend beyond the scripting capabilities of testing engines to general operations, which involves defining a new language for more general, non-trading operations. While such terminals are common in commercial/open-source software such as the Hummingbot terminal, the engineering required to maintain them is unlikely to be worth it for smaller groups, given how little such projects scale for them. Building them requires an understanding of compiler design, domain-specific language creation and interpreter development to produce robust formal languages and parsers.