As we advance into our third year on this blog, it is dawning on me that many readers are getting left behind. By far the biggest concern is the complexity of the current Russian Doll model, along with uncertainty about how to proceed with the statistical suite presented therein and the formulaic alphas.

Although I intended to extend the Russian Doll with integrated portfolio optimization methods, I think this is a fair time to step back and walk through how it evolved to where we are today.

So the next series of lectures will be a lesson in history: how we arrived at the powerful Python module that it is today. We will begin with a vanilla model that generates random signals, and walk you through individual lines of code as well as the design choices and logical behaviour. This should get you up to speed before we charge ahead with more technical implementations for portfolio management. I am also creating video lectures and coding walkthroughs, so if you would rather watch in video format, you may skip this post. The lectures are due next month.

We will begin with the code and walk you through it line by line. Throughout the guide, we will also share tips on building larger-scale systems, so that you can build your own applications scaling beyond tens of thousands of lines of code (the code files are downloadable at the bottom of the post).

```python
import pytz
import yfinance
import requests
import threading
import pandas as pd

from datetime import datetime
from bs4 import BeautifulSoup

def get_sp500_tickers():
    # Scrape the S&P 500 constituents table from Wikipedia
    res = requests.get("https://en.wikipedia.org/wiki/List_of_S%26P_500_companies")
    soup = BeautifulSoup(res.content,'html')
    table = soup.find_all('table')[0]
    df = pd.read_html(str(table))
    tickers = list(df[0].Symbol)
    return tickers

def get_history(ticker, period_start, period_end, granularity="1d", tries=0):
    # Fetch OHLCV bars for one ticker, retrying up to 5 times on failure
    try:
        df = yfinance.Ticker(ticker).history(
            start=period_start,
            end=period_end,
            interval=granularity,
            auto_adjust=True
        ).reset_index()
    except Exception as err:
        if tries < 5:
            return get_history(ticker, period_start, period_end, granularity, tries+1)
        return pd.DataFrame()

    df = df.rename(columns={
        "Date":"datetime",
        "Open":"open",
        "High":"high",
        "Low":"low",
        "Close":"close",
        "Volume":"volume"
    })
    if df.empty:
        return pd.DataFrame()

    # Standardise on a UTC-aware datetime index
    df["datetime"] = df["datetime"].dt.tz_localize(pytz.utc)
    df = df.drop(columns=["Dividends", "Stock Splits"])
    df = df.set_index("datetime",drop=True)
    return df

def get_histories(tickers, period_starts,period_ends, granularity="1d"):
    dfs = [None]*len(tickers)

    def _helper(i):
        print(tickers[i])
        df = get_history(
            tickers[i],
            period_starts[i],
            period_ends[i],
            granularity=granularity
        )
        dfs[i] = df

    threads = [threading.Thread(target=_helper,args=(i,)) for i in range(len(tickers))]
    [thread.start() for thread in threads]
    [thread.join() for thread in threads]
    #for i in range(len(tickers)): _helper(i) #can replace the 3 preceding lines for sequential polling

    # Keep only the tickers that returned data
    tickers = [tickers[i] for i in range(len(tickers)) if not dfs[i].empty]
    dfs = [df for df in dfs if not df.empty]
    return tickers, dfs

def get_ticker_dfs(start,end):
    from utils import load_pickle,save_pickle
    try:
        # Load the cached dataset from disk if it exists
        tickers, ticker_dfs = load_pickle("dataset.obj")
    except Exception as err:
        tickers = get_sp500_tickers()
        starts=[start]*len(tickers)
        ends=[end]*len(tickers)
        tickers,dfs = get_histories(tickers,starts,ends,granularity="1d")
        ticker_dfs = {ticker:df for ticker,df in zip(tickers,dfs)}
        save_pickle("dataset.obj", (tickers,ticker_dfs))
    return tickers, ticker_dfs

from utils import Alpha

period_start = datetime(2010,1,1, tzinfo=pytz.utc)
period_end = datetime.now(pytz.utc)
tickers, ticker_dfs = get_ticker_dfs(start=period_start,end=period_end)
alpha = Alpha(insts=tickers,dfs=ticker_dfs,start=period_start,end=period_end)
df = alpha.run_simulation()
print(df)
```

The entry point begins with

```python
period_start = datetime(2010,1,1, tzinfo=pytz.utc)
period_end = datetime.now(pytz.utc)
```

Here the most pertinent issue is setting a timezone. Across our application, you may be using data from different vendors and with different time alignments, such as New York or Tokyo time. If a datetime has no timezone, it is ambiguous when the data was actually sampled. Particularly when working with intraday data, not knowing the timezone in which a time series was sampled invites lookahead bias and other deadly errors. Here we are working with daily data, so we can safely standardise on UTC. We will stay consistent across the whole application, so that comparisons across application logic are fair. The next line follows:

```python
tickers, ticker_dfs = get_ticker_dfs(start=period_start,end=period_end)
```

which triggers:

```python
def get_ticker_dfs(start,end):
    from utils import load_pickle,save_pickle
    try:
        tickers, ticker_dfs = load_pickle("dataset.obj")
    except Exception as err:
        tickers = get_sp500_tickers()
        starts=[start]*len(tickers)
        ends=[end]*len(tickers)
        tickers,dfs = get_histories(tickers,starts,ends,granularity="1d")
        ticker_dfs = {ticker:df for ticker,df in zip(tickers,dfs)}
        save_pickle("dataset.obj", (tickers,ticker_dfs))
    return tickers, ticker_dfs
```

We can ignore the try block for now: it simply looks for a data cache on disk. On a cache miss, the except branch first calls:

```python
def get_sp500_tickers():
    res = requests.get("https://en.wikipedia.org/wiki/List_of_S%26P_500_companies")
    soup = BeautifulSoup(res.content,'html')
    table = soup.find_all('table')[0]
    df = pd.read_html(str(table))
    tickers = list(df[0].Symbol)
    return tickers
```

This goes to the specified URL and grabs the first `<table>` HTML block. You can visit the page in Chrome or Safari and open the developer tools (F12 in Chrome) to inspect the associated HTML. Hover over the table: that is the HTML table we are grabbing, which we load into a pandas DataFrame and extract the tickers from.

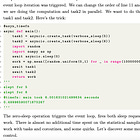
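To make the "grab the first table, then pull out the Symbol column" step concrete without a network call, here is a minimal stdlib-only sketch. It swaps BeautifulSoup and pd.read_html for Python's built-in html.parser, and the SAMPLE markup is made up; it only mimics the shape of the Wikipedia table. The class and function names are hypothetical.

```python
from html.parser import HTMLParser


class FirstTableRows(HTMLParser):
    """Collects the cell text of each row in the first <table> of a document."""

    def __init__(self):
        super().__init__()
        self.depth = 0      # nesting depth inside the first table
        self.done = False   # set once the first table has closed
        self.rows = []
        self._row = None
        self._cell = None

    def handle_starttag(self, tag, attrs):
        if self.done:
            return
        if tag == "table":
            self.depth += 1
        elif self.depth == 1 and tag == "tr":
            self._row = []
        elif self.depth == 1 and tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if self.done:
            return
        if tag == "table":
            self.depth -= 1
            self.done = self.depth == 0
        elif self.depth == 1 and tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif self.depth == 1 and tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        # Text inside nested tags such as <a> still lands in the current cell
        if self._cell is not None:
            self._cell.append(data)


def column_from_first_table(html, column):
    parser = FirstTableRows()
    parser.feed(html)
    header, *body = parser.rows
    idx = header.index(column)
    return [row[idx] for row in body if len(row) > idx]


SAMPLE = """
<table>
  <tr><th>Symbol</th><th>Security</th></tr>
  <tr><td><a href="#">MMM</a></td><td>3M</td></tr>
  <tr><td><a href="#">AOS</a></td><td>A. O. Smith</td></tr>
</table>
<table><tr><th>Other</th></tr><tr><td>ignored</td></tr></table>
"""

symbols = column_from_first_table(SAMPLE, "Symbol")
print(symbols)  # ['MMM', 'AOS']
```

Two practical notes if you stay with pandas: recent pandas versions deprecate passing a literal HTML string to pd.read_html, so wrapping it as pd.read_html(io.StringIO(str(table))) avoids the warning. Also, Wikipedia lists share classes with a dot (e.g. BRK.B) while Yahoo Finance uses a hyphen (BRK-B), so some symbols may need rewriting before they are passed to yfinance.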

We then call get_histories, which isn’t all that interesting, except for these few lines:

```python
threads = [threading.Thread(target=_helper,args=(i,)) for i in range(len(tickers))]
[thread.start() for thread in threads]
[thread.join() for thread in threads]
#for i in range(len(tickers)): _helper(i) #can replace the 3 preceding lines for sequential polling
```

The first three lines are essentially the multi-threaded version of the sequential requests in the commented-out for loop. Instead of going through the requests one by one and waiting for each network request to complete, we fire them all at the same time by spinning up worker threads; the join calls then wait until every thread has finished. Here we cannot simply use asyncio, because the yfinance API is blocking: each call would block the event loop. See our paper here for more notes:
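As a variation on the one-thread-per-ticker pattern in get_histories, here is a minimal sketch using a bounded thread pool from the standard library's concurrent.futures. fake_get_history is a hypothetical stand-in that sleeps instead of calling yfinance, so the sketch runs offline; the max_workers value is an arbitrary assumption.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_get_history(ticker):
    # Hypothetical stand-in for a blocking network call such as get_history
    time.sleep(0.2)
    return f"{ticker}-data"


def fetch_all(tickers, max_workers=16):
    # pool.map yields results in input order, so results[i] pairs with tickers[i]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fake_get_history, tickers))


tickers = [f"T{i}" for i in range(10)]
start = time.perf_counter()
results = fetch_all(tickers)
elapsed = time.perf_counter() - start
# The ten 0.2s sleeps overlap, so this should take far less than the ~2s a sequential loop needs
print(f"{len(results)} results in {elapsed:.2f}s")
```

The design trade-off: a pool caps how many requests hit the vendor at once (firing around 500 simultaneous threads can invite rate limiting), and the ordered map removes the need for index bookkeeping in a shared list. If the surrounding code is already asyncio-based, asyncio.to_thread (Python 3.9+) offloads a blocking call to a worker thread so the event loop is not blocked.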

The next interesting function is get_history. Recall our UTC timezone standardisation:

```python
df["datetime"] = df["datetime"].dt.tz_localize(pytz.utc)
```

This takes the timezone-naive datetime column of the pandas DataFrame (which becomes the index a few lines later) and makes it timezone-aware. It is also good practice to get rid of columns you won’t need:

```python
df = df.drop(columns=["Dividends", "Stock Splits"])
```

This saves RAM, and it can make the application more performant in subtle ways; for instance, our cache and RAM will hold less unnecessary data. We will get to performant programming in the coming posts. Here’s a tip for writing scalable code: standardise the schema (data types, data patterns, etc.) of objects that are passed between the components of your software. Here, the dataframes will always be passed with a timezone-aware datetime index:

```python
df = df.set_index("datetime",drop=True)
```

Following this principle, we can create powerful wrappers that use a common interface across multiple external APIs. Recently a reader commented that this post was a total game changer for them:

Each method or function should have a predefined interface that specifies what is guaranteed about its inputs and outputs.
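One way to make such an interface explicit is sketched below, under stated assumptions: PriceSource, to_utc_index, check_ohlcv and ToyVendor are hypothetical names, not part of the code above. Each vendor wrapper normalises its timestamps to UTC at the boundary and validates the agreed schema (UTC-aware DatetimeIndex plus lowercase OHLCV columns) before handing data to the rest of the application. A DataFrame produced by get_history above should satisfy this check.

```python
from datetime import timedelta, timezone
from typing import Protocol

import pandas as pd

OHLCV = ["open", "high", "low", "close", "volume"]


class PriceSource(Protocol):
    """Hypothetical interface that every vendor wrapper would satisfy."""

    def get_history(self, ticker: str, start: pd.Timestamp, end: pd.Timestamp) -> pd.DataFrame:
        ...


def to_utc_index(df: pd.DataFrame) -> pd.DataFrame:
    """Localize a naive index as UTC, or convert an aware index to UTC."""
    if df.index.tz is None:
        return df.tz_localize("UTC")
    return df.tz_convert("UTC")


def check_ohlcv(df: pd.DataFrame) -> pd.DataFrame:
    """Raise if df breaks the agreed schema; otherwise return it unchanged."""
    if not isinstance(df.index, pd.DatetimeIndex):
        raise TypeError("index must be a DatetimeIndex")
    if df.index.tz is None or str(df.index.tz) != "UTC":
        raise ValueError("index must be timezone-aware UTC")
    missing = [c for c in OHLCV if c not in df.columns]
    if missing:
        raise ValueError(f"missing columns: {missing}")
    return df


class ToyVendor:
    """Hypothetical vendor whose timestamps carry a fixed UTC-5 offset."""

    def get_history(self, ticker, start, end):
        idx = pd.date_range(start, end, freq="D", name="datetime")
        idx = idx.tz_localize(timezone(timedelta(hours=-5)))
        raw = pd.DataFrame({c: 1.0 for c in OHLCV}, index=idx)
        # Normalise at the boundary so callers only ever see the agreed schema
        return check_ohlcv(to_utc_index(raw))


source: PriceSource = ToyVendor()
bars = source.get_history("AAPL", pd.Timestamp("2024-01-02"), pd.Timestamp("2024-01-05"))
print(bars.index[0])  # 2024-01-02 05:00:00+00:00

# Dropping the timezone simulates a wrapper that breaks the contract
try:
    check_ohlcv(bars.tz_localize(None))
    naive_rejected = False
except ValueError:
    naive_rejected = True
```

Note the difference between the two normalisation paths: tz_localize attaches UTC to naive timestamps without moving them, whereas tz_convert shifts aware timestamps, so a vendor's midnight bars in New York time land at 05:00 UTC here. That matters when aligning daily bars from different vendors on the same index.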

Continuing…